Real people want positive web experiences. In practice, how does that look?

According to a study Google cited in a blog post about Core Web Vitals, mobile web users keep their attention on the screen for only 4-8 seconds at a time.

Reread that sentence.

You only have 8 seconds to deliver interactive content and get a user to complete a task.

Enter Core Web Vitals (CWV). These three metrics are designed to measure site performance in terms of human experience. The open-source Chromium project announced the metrics in early May 2020, and they were quickly adopted across Google products.

How do we define performance in user-centric metrics?

- Is it loading?

- Can I interact?

- Is it visually stable?

Core Web Vitals fundamentally measure how long it takes to complete the script functions required to paint the above-the-fold content. A 360 x 640 viewport serves as the arena for these Herculean tasks. It’s small enough to fit in your pocket!

This war-drum for unresolved tech debt is a boon to many product owners and tech SEO professionals who have been pushed aside in favor of new features and flashy baubles.

Will the Page Experience update be Mobilegeddon 4.0?

Most likely not.

Users are 24 percent less likely to abandon page loads when your page passes CWV assessments. These efforts benefit every source and medium, as well as, most importantly, real people.

Page Experience Improvements

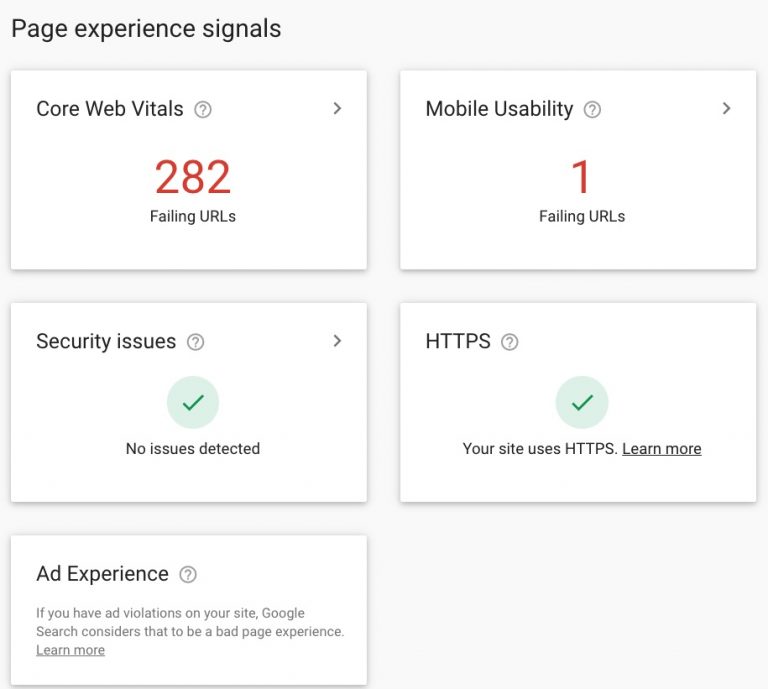

Despite the hype, Core Web Vitals will be elements of a ranking signal rather than the whole of it. The Page Experience ranking signal, expected to roll out gradually from mid-June through August 2021, will comprise:

- Core Web Vitals.

  - Largest Contentful Paint.

  - First Input Delay.

  - Visual Stability.

- Mobile-Friendly.

- Safe Browsing.

- HTTPS.

- No Intrusive Interstitials.

The documentation has been updated to state that the rollout will be gradual and that “sites should not expect drastic changes.”

Important information about the update:

- Page Experience is evaluated per URL.

- Page Experience is based on a mobile browser.

- AMP is no longer required for Top Stories carousels.

- Passing CWV is not a requirement to appear in Top Stories carousels.

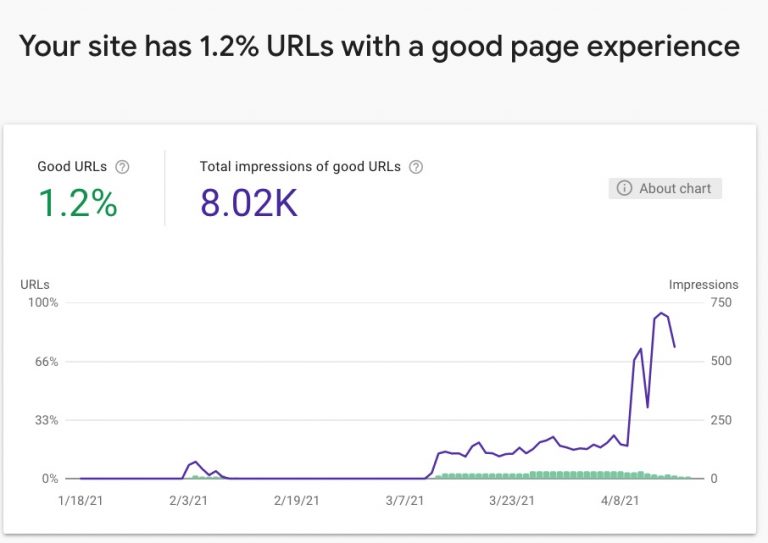

A New Page Experience Report in Google Search Console

A Page Experience report is now available in Search Console. Backdated data for the last 90 days is included in the new resource.

A URL must meet the following criteria to be considered “Good”:

- In the Core Web Vitals report, the URL is rated as Good.

- According to the Mobile Usability report, the URL has no mobile usability issues.

- There are no security concerns with the site.

- HTTPS is used to serve the URL.

- The site has no Ad Experience issues, or the site was not Ad Experience tested.

The new report includes high-level widgets that connect to reports for each of the five “Good” criteria.

Workflow for Diagnosing and Implementing CWV Enhancements

First, an important note about field vs lab data.

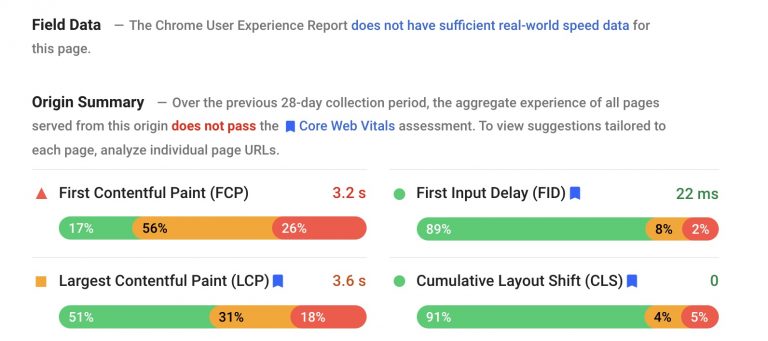

Field Data is performance data gathered from real-world page loads experienced by your users. Field Data may also be referred to as Real User Monitoring.

Core Web Vitals assessments and the Page Experience ranking signal will use field data gathered by the Chrome User Experience Report (Crux).

What Users Are Included in the Chrome User Experience Report?

Crux data is compiled from users who meet three criteria:

- The user opted in to syncing their browsing history.

- The user has not set up a Sync passphrase.

- The user has usage statistics reporting enabled.

Crux is your go-to resource for Core Web Vitals assessment.

Crux data can be accessed via Google Search Console, PageSpeed Insights (page-level), the Public Google BigQuery project, or as an origin-level dashboard in Google Data Studio.

Why would you ever use anything else? Because CWV field data is a restricted set of metrics with limited debugging capabilities, and it is subject to data availability requirements.

Why isn’t Crux data available on my page?

When you test your page, you may see the message “The Chrome User Experience Report does not have enough real-world speed data for this page.”

This is due to the anonymization of Crux data. There must be enough page loads to report without the possibility of identifying the individual user.

Core Web Vitals are best identified using field data and then diagnosed and QA’d using lab data.

Lab Data enables you to debug performance by providing end-to-end visibility into UX. It is called “lab” data because this emulated data is collected in a controlled environment with predefined device and network settings.

PageSpeed Insights, web.dev/measure, Chrome DevTools’ Lighthouse panel, and Chromium-based crawlers such as a local NodeJS Lighthouse or DeepCrawl can all provide lab data.

Let’s take a look at a workflow process.

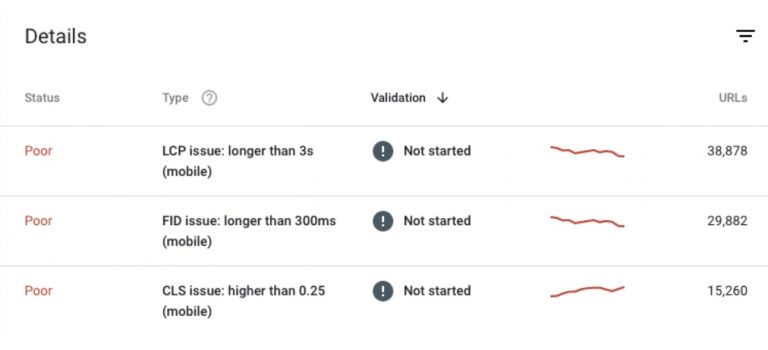

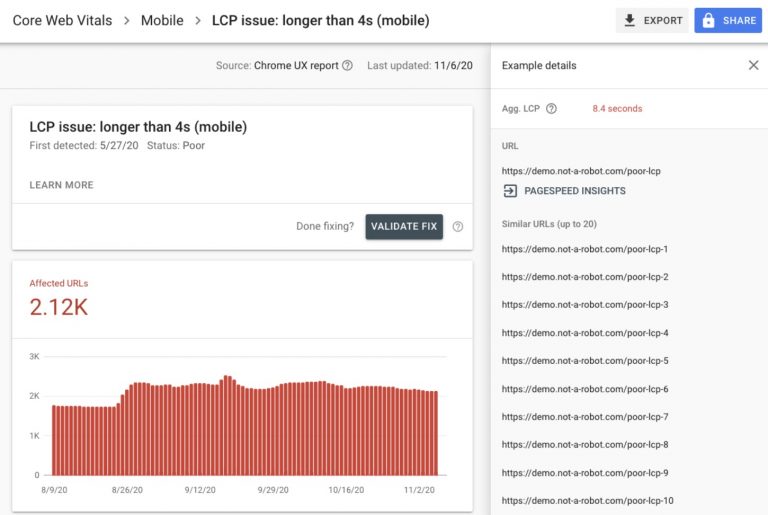

1. Identify Issues in Search Console Using Crux Data Grouped By Behavior Patterns

Begin with the Core Web Vitals report in Search Console to identify groups of pages that require attention. This data set makes use of Crux data and groups together example URLs based on behavior patterns.

If you fix the root problem on one page, you’re likely to fix it on all pages that have the same CWV problem. These issues are typically shared by a template, CMS instance, or on-page element. GSC will do the grouping for you.

Concentrate on mobile data, as Google is transitioning to a mobile-first index and CWV is expected to impact mobile SERPs. Prioritize your efforts based on the number of URLs that have been affected.

Click into an issue to see examples of URLs that exhibit the same patterns of behavior.

Save these example URLs for testing throughout the process of improvement.

2. Combine field data with lab diagnostics using PageSpeed Insights

After you’ve identified the pages that require attention, use PageSpeed Insights (powered by Lighthouse and Chrome UX Report) to diagnose lab and field issues on a page.

Keep in mind that lab tests are one-time emulated tests. One test is not a reliable source of information or a final answer. Experiment with various example URLs.

PageSpeed Insights can only be used to test URLs that are publicly available and indexable.

If you’re working on noindex or authenticated pages, Crux data is available via API or BigQuery. Lab tests should use Lighthouse.
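
For the API route, a request might be assembled as in the sketch below. It assumes the public CrUX API `records:queryRecord` endpoint and its snake_case metric names as they stood while FID was a Core Web Vital; the helper names `buildCruxRequest` and `p75` are hypothetical, and the API key is a placeholder you would supply yourself.

```javascript
// Sketch: build a CrUX API request for one URL's mobile field data.
// Assumes the records:queryRecord endpoint; check the current API docs
// before relying on the metric names below.
const CRUX_ENDPOINT =
  "https://chromeuxreport.googleapis.com/v1/records:queryRecord";

function buildCruxRequest(pageUrl, apiKey) {
  return {
    endpoint: `${CRUX_ENDPOINT}?key=${encodeURIComponent(apiKey)}`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        url: pageUrl,
        formFactor: "PHONE", // mobile data, matching the mobile focus above
        metrics: [
          "largest_contentful_paint",
          "first_input_delay",
          "cumulative_layout_shift",
        ],
      }),
    },
  };
}

// Read the 75th-percentile value for one metric out of a parsed response.
function p75(response, metric) {
  return response.record.metrics[metric].percentiles.p75;
}
```

In a runtime with `fetch`, the pair returned by `buildCruxRequest` can be passed straight through: `fetch(req.endpoint, req.options)`.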

3. Make a Ticket. Carry out the development work.

SEO professionals, I encourage you to take part in ticket refinement and QA.

Sprints are commonly used by development teams. Each sprint has a set number of tickets. With well-written tickets, your development team can better size the effort and get the ticket into a sprint.

Include the following in your tickets:

User Story

Follow a simple format:

As a < type of user/site/etc >, I want < action > in order to < goal >

For example: As a performant site, I want to include inline CSS for node X on page template Y in order to achieve Largest Contentful Paint for this page template in under 2.5 seconds.

Acceptance Criteria

Define when the goal has been achieved. What exactly does “done” mean? E.g.:

- Any critical-path CSS used for above-the-fold content is inlined in the `<head>`.

- Critical CSS (that is, CSS related to node X) appears above JS and CSS resource links in the `<head>`.

URL/Strategy Testing

Include the grouped example URLs from Search Console that you copied. Provide QA engineers with a set of steps to follow.

Consider the tool used, the metric/marker to look for, and the behavior that indicates a pass or fail.

Developer Document Links

When available, use first-party documentation. No fluffy blogs, please. Please?

4. QA Changes in Staging Environments Using Lighthouse

Before code is pushed to production, it is frequently tested in a staging environment. To measure Core Web Vitals, use Lighthouse (via Chrome DevTools or a local node instance).

If you’re new to Lighthouse testing, A Technical SEO Guide to Lighthouse Performance Metrics can help you learn about how to test and testing methodology.

Remember that lower environments typically have fewer resources and are less performant than production environments.

Use the acceptance criteria to determine whether the development work completed met the task requirements.

Largest Contentful Paint

Represents: Perceived loading experience.

Measurement: The point in the page load timeline at which the largest image or text block on the page is visible within the viewport.

Key Behaviors: Pages that use the same page templates typically share the same LCP node.

Goal: 75% of page loads achieve LCP in 2.5 seconds or less.

Available as: Lab data and field data.
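
To make the 75% goal concrete, here is a rough sketch that checks a set of LCP samples against it. It assumes a nearest-rank percentile, which is one common definition and not necessarily the method Crux applies; `percentile` and `meetsLcpGoal` are hypothetical helper names.

```javascript
// Nearest-rank percentile over an array of numeric samples (an assumption;
// other percentile definitions interpolate between samples).
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

const LCP_GOAL_MS = 2500; // 2.5 seconds

// True when the 75th-percentile page load hits LCP within the goal.
function meetsLcpGoal(lcpSamplesMs) {
  return percentile(lcpSamplesMs, 75) <= LCP_GOAL_MS;
}
```

With eight samples, the sixth-fastest load decides the outcome, so a couple of slow outliers do not fail the page on their own.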

What Exactly Is LCP?

The LCP metric determines when the viewport’s largest text or image element is visible.

Elements that can serve as a page’s LCP node include:

- `<img>` elements.

- `<image>` elements inside an `<svg>` tag.

- Poster images of `<video>` elements.

- Background images loaded via the url() CSS function.

- Text nodes inside block-level elements.

Expect elements like `<svg>` and `<video>` to be added in future iterations.

Using Chrome DevTools to Identify LCP

- Open the page in Chrome while emulating a Moto G4.

- Open the DevTools Performance panel (Command + Option + I on Mac or Control + Shift + I on Windows and Linux).

- In the Timings section, hover your mouse over the LCP marker.

- The Related Node field contains information about the element(s) that correspond to LCP.
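
The same information can be read in script. The sketch below assumes a Chromium-based browser that exposes the `largest-contentful-paint` performance entry type; `finalLcp` is a hypothetical helper, and the observer wiring is skipped wherever the entry type is unsupported.

```javascript
// The browser may report several LCP candidates as the page loads;
// the last one reported is the page's LCP.
function finalLcp(entries) {
  if (entries.length === 0) return null;
  const last = entries[entries.length - 1];
  return { timeMs: last.startTime, element: last.element || null };
}

// Browser-only wiring, guarded so the snippet is harmless elsewhere.
const lcpSupported =
  typeof PerformanceObserver !== "undefined" &&
  (PerformanceObserver.supportedEntryTypes || []).includes(
    "largest-contentful-paint"
  );

if (lcpSupported) {
  new PerformanceObserver((list) => {
    console.log("LCP candidate:", finalLcp(list.getEntries()));
  }).observe({ type: "largest-contentful-paint", buffered: true });
}
```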

What Factors Contribute to Poor LCP?

Poor LCP is caused by four common issues:

- Slow server response time.

- Render-blocking JavaScript and CSS.

- Slow resource load times.

- Client-side rendering.

At best, source issues for LCP are painted in broad strokes. Unfortunately, none of the above phrases are likely to be sufficient to pass along to your development team with meaningful results.

However, you can give the issue traction by focusing on which of the four origins is at work.

Improving LCP will require collaboration. Fixing it entails attending dev updates and following up as a stakeholder.

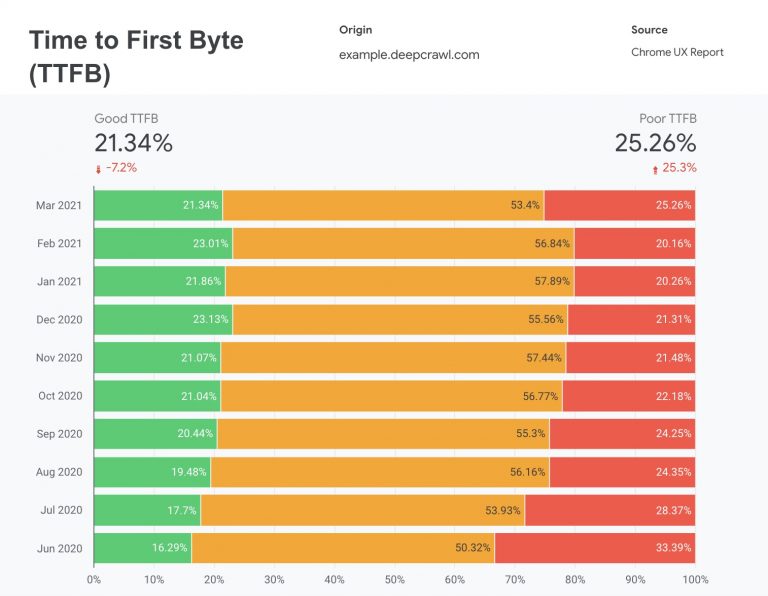

Poor LCP Diagnosis Due To Slow Server Response Time

If you consistently see poor (slow) TTFB in your field data, slow server response time is likely dragging down LCP.

How to Improve a Slow Server Response Time

Server response time is determined by a variety of factors unique to the site’s technology stack. There are no silver bullets in this situation. Your best bet is to open a support ticket with your development team.

TTFB could be improved in the following ways:

- Optimize your server.

- Route users to a nearby CDN.

- Cache assets.

- Serve HTML pages cache-first.

- Establish third-party connections early.

Poor LCP Diagnosis Due To Render-Blocking JavaScript And CSS

Where to look: Lighthouse (via web.dev/measure, Chrome DevTools, PageSpeed Insights, or a Node.js instance). Each of the solutions below has a corresponding audit flag.

How to Resolve Render-Blocking CSS

CSS is inherently render-blocking and affects critical rendering path performance. By default, the browser treats CSS as a render-blocking resource.

Regardless of blocking or non-blocking behavior, the browser downloads all CSS resources.

Minify CSS.

If your site uses a module bundler or build tool, look for a plugin that will systematically minify your stylesheets.

Defer non-critical CSS.

The DevTools Code Coverage report will assist you in determining which styles are used on the page. Remove it entirely if it isn’t used on any pages. (Don’t judge; CSS files can quickly fill up the proverbial junk drawer.)

If the styles are used on another page, create a separate style sheet for those pages.

Inline critical CSS.

Include the critical-path CSS for above-the-fold content (as determined by the Code Coverage report) directly in the `<head>`.

Use dynamic media queries.

When applied to CSS styles, media queries are simple filters that separate the styles based on the type of device rendering the content.

Instead of calculating styles for all viewports, dynamic media queries call and calculate those values for the requesting viewport.

How to Resolve Render-Blocking JavaScript Issues

Minify and compress JavaScript files.

You’ll need to work with developers to minify and compress network payloads.

Minification is the process of removing unnecessary whitespace and code. It’s best done systematically with a JavaScript compression tool.

Compression entails algorithmically modifying the data format to allow for more efficient server and client interactions.

Remove any unused JavaScript.

Code splitting divides large chunks of JS into smaller packages. You can then prioritize those that are relevant to above-the-fold content.
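
As a rough illustration of prioritizing chunks, the sketch below defers a below-the-fold chunk until it is first needed. `lazy` is a hypothetical helper, and `./carousel.js` in the comment is a made-up module path; in a bundled app the loader would typically be a dynamic `import()`, which most bundlers treat as a split point.

```javascript
// Wrap a loader so the chunk is requested once, on first use, and the
// same promise is reused for every later call.
function lazy(loader) {
  let pending;
  return function load() {
    if (!pending) pending = loader();
    return pending;
  };
}

// Hypothetical usage in a bundled app:
//   const loadCarousel = lazy(() => import("./carousel.js"));
//   button.addEventListener("click", () => loadCarousel().then((m) => m.init()));
```

Above-the-fold code stays in the initial bundle; anything wrapped this way costs nothing on the main thread until a user actually asks for it.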

Minimize unused polyfills.

Remember how Googlebot was stuck on Chrome 41 for what seemed like an eternity? A polyfill is a piece of code that provides modern functionality on older browsers that do not support it natively.

Now that Googlebot is evergreen, polyfills kept around for its sake also go by another name: tech debt.

Some compilers include functionality for removing legacy polyfills.

How to Fix Render-Blocking Third-Party Scripts

Delay it.

If the script does not contribute to above-the-fold content, use the async or defer attribute.

Remove it.

If the script uses an `<iframe>` in the `<head>`, remove it. Contact the vendor for an updated implementation method.

Consolidate it.

Audit your use of third-party scripts. Who is in charge of the tool? A third-party tool that nobody manages is also known as a liability.

What value does it provide? Is that value greater than the impact on performance? Can the same result be achieved by consolidating tools?

Update it.

Another option is to ask the provider whether they have an updated lean or asynchronous version. Sometimes they do and don’t tell the people still using the old implementation.

Poor LCP Diagnosis Due To Slow Resource Load Times

Where to look: Lighthouse (via web.dev/measure, Chrome DevTools, PageSpeed Insights, or a Node.js instance). Each of the solutions below has a corresponding audit flag.

Browsers retrieve and execute resources as they come across them. Our paths to discovery aren’t always smooth. Occasionally, the resources are not optimized for their on-page experiences.

Here are some strategies for dealing with the most common causes of slow resource load times:

- Optimize and compress images.

  Nobody needs a 10MB png file. There is rarely a use case for shipping a large image file. Or a png.

- Preload important resources.

  If a resource is in the critical path, a simple rel="preload" attribute tells the browser to fetch it as soon as possible.

- Compress text files.

  Encode, compress, repeat.

- Deliver different assets based on network connection (adaptive serving).

  A mobile device on a 4G network is unlikely to need, want, or tolerate the loading time of assets made for an ultra 4K monitor. Use the Network Information API, which lets web applications access information about the user’s network.

- Cache assets using a service worker.

  While Googlebot does not execute service workers, a user’s device on a thimble’s worth of network certainly will. Work with your development team to use the Cache Storage API.
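
Adaptive serving can be as small as one decision function. The sketch below reads the `effectiveType` and `saveData` properties of the Network Information API; the variant names ("low", "medium", "high") and the `pickImageVariant` helper are hypothetical, and the API is not available in every browser, hence the fallback.

```javascript
// Choose an image variant from Network Information API values.
function pickImageVariant(connection) {
  if (!connection) return "medium"; // API unsupported: fall back to a middle option
  if (connection.saveData) return "low"; // user asked for reduced data usage
  switch (connection.effectiveType) {
    case "slow-2g":
    case "2g":
      return "low";
    case "3g":
      return "medium";
    default:
      return "high"; // "4g" or anything faster
  }
}
```

In a browser this would be called as `pickImageVariant(navigator.connection)`, with the result used to pick the image URL or `srcset` candidate.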

Poor LCP Diagnosis Due to Client-Side Rendering

Where to look: For a quick glance, view the page source. If it’s a couple of lines of gibberish, the page is client-side rendered.

Elements within a page can also be client-side rendered. To spot which ones, compare the initial page source with the rendered HTML. If you’re using a crawler, compare the rendered word count difference.
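
The word count comparison can be approximated in a few lines. This is a rough sketch: it strips tags with regular expressions rather than a real HTML parser, so treat the numbers as a signal, not a measurement. Both helper names are hypothetical.

```javascript
// Count words in the text left after removing scripts, styles, and tags.
function visibleWordCount(html) {
  const text = html
    .replace(/<(script|style)[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ");
  return text.trim().split(/\s+/).filter(Boolean).length;
}

// Share of rendered words that were absent from the initial page source.
// A value near 1 suggests the content is rendered client-side.
function clientRenderedShare(sourceHtml, renderedHtml) {
  const source = visibleWordCount(sourceHtml);
  const rendered = visibleWordCount(renderedHtml);
  return rendered === 0 ? 0 : (rendered - source) / rendered;
}
```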

Core Web Vitals are a way for us to assess the efficacy of our rendering strategies.

Although all rendering options produce the same output (web pages), CWV metrics measure how quickly we deliver what matters when it matters.

Client-side rendering is rarely the answer unless the question is, “What changes were implemented at the same time that organic traffic began to decline?”

How to Fix Client-Side Rendering

“Stop” isn’t a useful response. Accurate, but not useful. Instead, try this:

- Minimize critical JavaScript.

  Use code splitting, tree shaking, and inline functions in the head for above-the-fold functionality. Keep those inline scripts under 1kb.

- Use server-side rendering.

  By having your servers execute JS elements, you can return fully rendered HTML. Note that this will increase your TTFB, because the scripts are executed before your server responds.

- Use pre-rendering.

  Execute your scripts at build time to have rendered HTML ready for incoming requests. This option improves server response time but won’t work for sites with frequently changing inventory or prices.

To be clear, dynamic rendering is not a solution for client-side rendering. It only gives the troubles of client-side rendering a friend.

First Input Delay (FID)

Represents: Responsiveness to user input.

Measurement: The time from when a user first interacts with a page to when the browser is able to begin processing event handlers in response to that interaction.

Key Behaviors: FID is only available as field data.

Goal: 75% of page loads achieve FID in 100 milliseconds or less.

Available as: Field data.
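
The measurement above maps directly onto two timestamps. The sketch below assumes a browser that exposes the `first-input` performance entry type, whose entries carry `startTime` (when the input happened) and `processingStart` (when handlers could begin running); `firstInputDelay` is a hypothetical helper name.

```javascript
// FID is the gap between the user's first input and the moment the
// browser could start running event handlers for it.
function firstInputDelay(entry) {
  return entry.processingStart - entry.startTime;
}

// Browser-only wiring, guarded so the snippet is harmless elsewhere.
const fidSupported =
  typeof PerformanceObserver !== "undefined" &&
  (PerformanceObserver.supportedEntryTypes || []).includes("first-input");

if (fidSupported) {
  new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      console.log("FID (ms):", firstInputDelay(entry));
    }
  }).observe({ type: "first-input", buffered: true });
}
```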

For lab tests, use Total Blocking Time (TBT)

Because FID is only available as field data, you’ll need to use Total Blocking Time for lab tests. The two achieve the same end result with different thresholds.

TBT represents: Responsiveness to user input.

TBT measurement: The total time the main thread is blocked by long tasks, counting the portion of each task beyond 50ms.

Goal: 300 milliseconds or less.

Available as: Lab Data
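
As a worked example of the arithmetic, the sketch below sums the blocking portion of each task, meaning only the time beyond the 50ms long-task threshold. It assumes the input is a list of main-thread task durations from the window Lighthouse measures; `totalBlockingTime` is a hypothetical helper name.

```javascript
const LONG_TASK_MS = 50;

// Sum only the part of each task that exceeds the long-task threshold.
function totalBlockingTime(taskDurationsMs) {
  return taskDurationsMs.reduce(
    (total, duration) => total + Math.max(0, duration - LONG_TASK_MS),
    0
  );
}

// Tasks of 30, 120, 250, and 50 ms contribute 0 + 70 + 200 + 0.
console.log(totalBlockingTime([30, 120, 250, 50])); // 270
```

Note that a 250ms task costs far more than five 50ms tasks, which is why breaking up long tasks moves this metric.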

What Factors Contribute to Poor FID?

    const jsHeavy = true;

    // Never exits: the main thread stays busy, so user input has to wait.
    while (jsHeavy) {
      console.log("FID fail");
    }

Heavy JavaScript. That’s it.

Poor FID is caused by JS occupying the main thread, which means your user’s interactions must wait.

What Elements of the Page Are Affected by FID?

FID is a way of measuring main thread activity. Before on-page elements can respond to user interaction, in-progress tasks on the main thread have to complete.

Here are some of the most common elements that your frustrated user is tapping:

- Text fields, checkboxes, and radio buttons (`<input>` and `<textarea>`).

- Select dropdowns (`<select>`).

- Links (`<a>`).

Where to look: To confirm it’s an issue for users, look at Crux Dashboard v2 – First Input Delay (FID) (page 3). Use Chrome DevTools to identify the exact tasks.

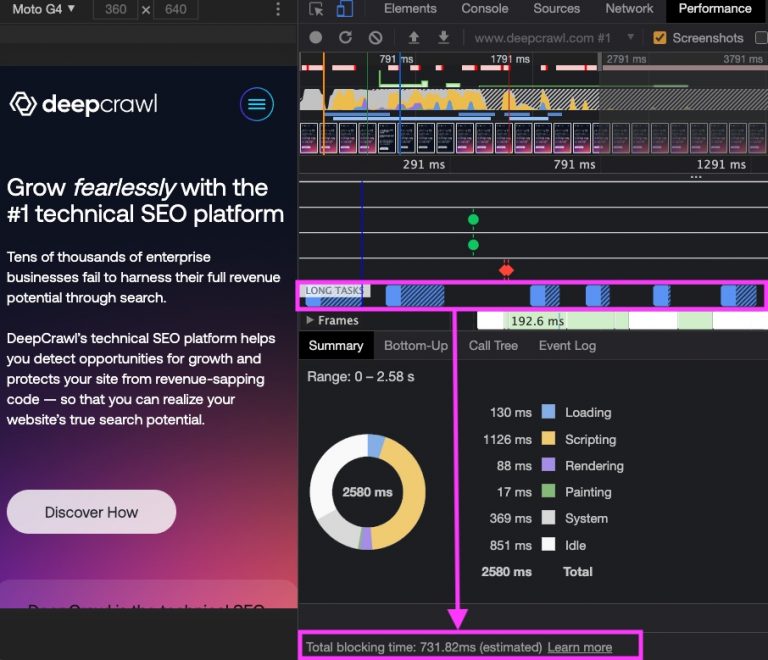

How to Use Chrome DevTools to View TBT

- Launch Chrome and navigate to the page.

- Open the DevTools Network panel (Command + Option + I on Mac or Control + Shift + I on Windows and Linux).

- Check the box to disable cache.

- Go to the Performance panel and check the Web Vitals box.

- Click the reload button to start a performance trace.

- Look for the blue blocks labeled Long Tasks, or for the red markers in the top right corner of tasks. These indicate long tasks that took more than 50 milliseconds.

- Find the TBT for the page at the bottom of the summary.

How to Repair a Bad FID

Stop loading so many third-party scripts.

Third-party code puts your performance at the mercy of another team’s stack.

For your site to be considered performant, you depend on their scripts executing in a succinct, performant manner.

Free up the main thread by breaking up Long Tasks.

If you ship a single large JS bundle for each page, there will be many functionalities in that bundle that do not contribute to the page.

Regardless of whether they contribute or not, each JS function must be downloaded, parsed, compiled, and executed.

You can free up the main thread by breaking that large bundle into smaller chunks and shipping only those that contribute.

Check your tag manager.

Out-of-the-box tag deployments fire event listeners that tie up your main thread.

Tag managers can be long-running input handlers that block scrolling. Work with developers to debounce your input handlers.
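
For reference when you talk to developers, a debounce helper can be sketched as follows. This is a generic trailing-edge debounce, not the implementation of any particular tag manager; `debounce` and the 200ms figure in the usage comment are illustrative choices.

```javascript
// Return a wrapper that runs fn only after calls have stopped for waitMs.
// Each new call cancels the pending run and restarts the timer.
function debounce(fn, waitMs) {
  let timer;
  return function debounced(...args) {
    clearTimeout(timer);
    timer = setTimeout(() => fn.apply(this, args), waitMs);
  };
}

// Hypothetical usage: run the handler once per burst of input events.
//   input.addEventListener("input", debounce(trackSearchTerm, 200));
```

A burst of keystrokes or scroll events then triggers the expensive handler once instead of dozens of times, leaving the main thread free in between.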

Optimize your page for interaction readiness.

Ship and execute those JS bundles in an order that matters.

Is it needed above the fold? It gets priority. Use the rel=preload attribute.

Important, but not enough to block rendering? Add the async attribute.

Below the fold? Delay it with the defer attribute.

Use a web worker.

Web workers allow JavaScript to run in the background rather than on the main thread on which your FID is scored.

Reduce JavaScript execution time.

The reasoning is the same as for long tasks: every JS function in an oversized bundle has to be downloaded, parsed, compiled, and executed, whether or not the page uses it. Break the bundle into smaller chunks (code splitting) and ship only those that contribute (tree shaking).

Cumulative Layout Shift (CLS)

Measurement: A calculation based on the number of frames in which the element(s) moves visually and the total distance in pixels moved by the element(s).

layout shift score = impact fraction * distance fraction

CLS is the only Core Web Vital not measured in time. Instead, CLS is a calculated metric. The exact calculations are actively being iterated on.

Goal: 75% of page loads have a CLS calculated metric of 0.10 or less.

Available as: Lab data and field data.
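
The layout shift formula above can be worked through in code. This sketch multiplies the two fractions for a single shift and then adds up shifts in the simplest possible way, skipping those that follow recent user input (the `hadRecentInput` flag carried by browser `layout-shift` entries). Because the exact aggregation is still being iterated on, treat the plain sum as an assumption; both helper names are hypothetical.

```javascript
// Score for one shift: how much of the viewport was affected, times how
// far the content moved relative to the viewport.
function layoutShiftScore(impactFraction, distanceFraction) {
  return impactFraction * distanceFraction;
}

// Add up shift values, ignoring shifts the user triggered themselves.
function cumulativeLayoutShift(shifts) {
  return shifts
    .filter((shift) => !shift.hadRecentInput)
    .reduce((sum, shift) => sum + shift.value, 0);
}

// An element affecting 75% of the viewport that moves by 25% of its height:
console.log(layoutShiftScore(0.75, 0.25)); // 0.1875
```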

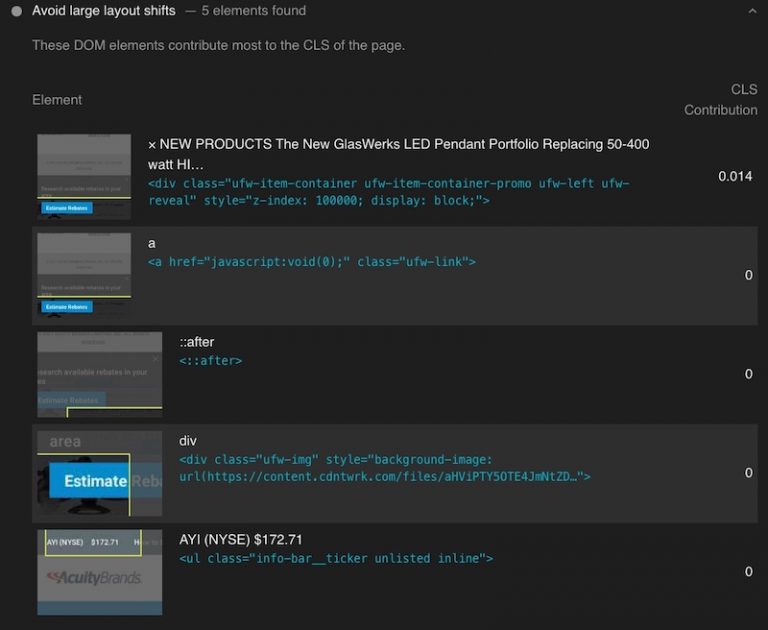

Poor CLS Diagnosis

Where to look: To confirm it’s an issue for users, look at Crux Dashboard v2 – Cumulative Layout Shift (CLS) (page 4). Use any tool with Lighthouse to identify the bouncing element(s).

Chrome DevTools will provide more information about the excitable element’s coordinates and how many times it moves.

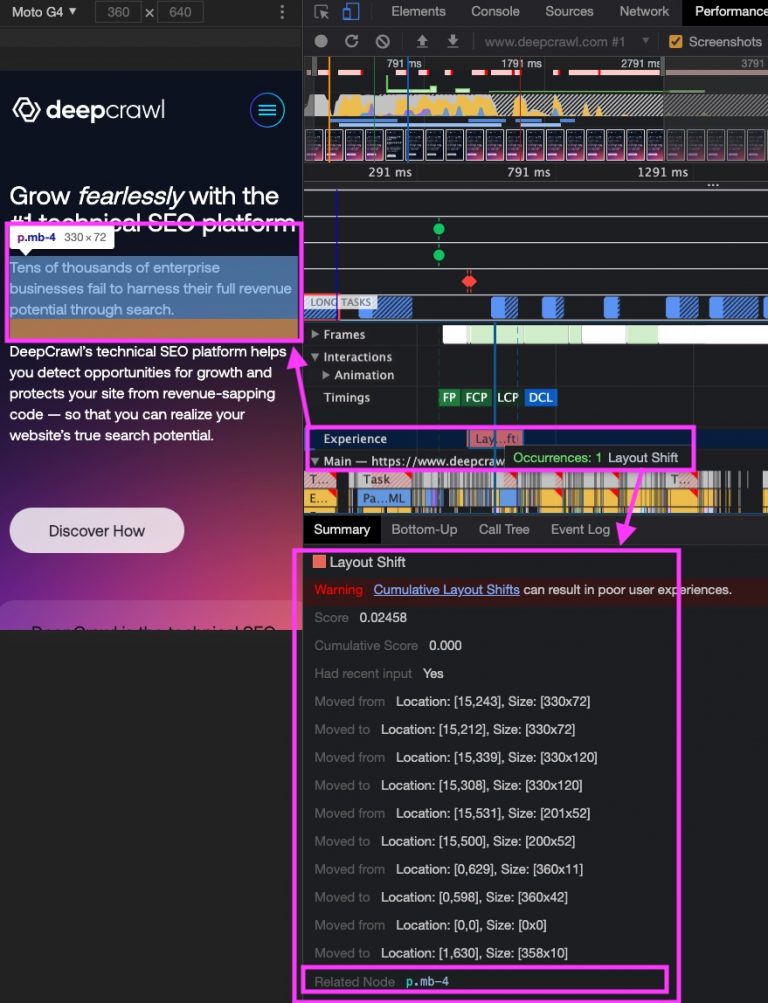

Using Chrome DevTools to View CLS

- Launch Chrome and navigate to the page.

- Open the DevTools Network panel (Command + Option + I on Mac or Control + Shift + I on Windows and Linux).

- Check the box to disable cache.

- Go to the Performance Panel and check the Web Vitals box.

- To begin a performance trace, click the reload button.

- In the Experience section, click on the red marker(s).

- Look for the node’s name, highlighting on the page, and coordinates for each time the element moved.

What Is Included in CLS?

If an element appears in the first viewport, it is included in the metric’s calculation.

If you load your footer before your primary content and it appears in the viewport, the footer is included in your (likely mediocre) CLS score.

What Factors Contribute to Poor CLS?

Is it your cookie notice? It’s probably your cookie notice.

Otherwise, look for:

- Images without dimensions.

- Ads, embeds, and iframes without dimensions.

- Dynamically injected content.

- Web fonts causing FOIT/FOUT.

- Chains for critical resources.

- Actions waiting for a network response before updating the DOM.

How to Fix Poor CLS

Always include width and height size attributes on images and video elements.

It’s as simple as `<img src="stable-layout.jpg" width="640" height="360" />`, but also not. Responsive web design saw the decline of height and width declarations. The downside is that pages reflow once the image appears on screen.

The best practice is to use user-agent stylesheets for systematically declared dimensions based on the aspect ratio of the image.
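
The arithmetic a browser can do with those attributes is simple: the declared width and height give an aspect ratio, and the ratio gives the height to reserve at whatever width the image ends up rendering. A minimal sketch, with `reservedHeight` as a hypothetical helper name:

```javascript
// Height to reserve for an image before it loads, given its declared
// width/height attributes and the width it will render at.
function reservedHeight(attrWidth, attrHeight, renderedWidth) {
  return renderedWidth * (attrHeight / attrWidth);
}

// A 640x360 image rendered 320px wide needs 180px of reserved height.
console.log(reservedHeight(640, 360, 320)); // 180
```

With that space held open from the start, the image arriving later does not push the content below it.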

Reserve space for ad slots (and don’t collapse it).

If you’re a publishing site, you’re never going to win an argument over the negative performance impact of third-party ads.

Instead, identify the largest ad size that could be used in a slot and reserve space for it. If the ad doesn’t populate, keep the placeholder. The gap is better than a layout shift.
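
One way to work out the space to reserve is sketched below. It takes the sizes a slot could serve and returns CSS for the smallest box that fits all of them, which is one interpretation of “largest ad size”; the sizes in the example and the `reserveAdSlot` helper are illustrative assumptions.

```javascript
// Given [width, height] pairs a slot may serve, return CSS that reserves
// a box large enough for any of them.
function reserveAdSlot(sizes) {
  const width = Math.max(...sizes.map(([w]) => w));
  const height = Math.max(...sizes.map(([, h]) => h));
  return `min-width: ${width}px; min-height: ${height}px;`;
}

console.log(reserveAdSlot([[300, 250], [336, 280], [300, 600]]));
// min-width: 336px; min-height: 600px;
```

Apply the result to the slot’s container and leave it in place even when no ad fills.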

Avoid inserting new content above existing content.

An element shouldn’t enter the fighting arena unless it’s ready to be counted.

Take care when placing non-sticky ads near the top of the viewport.

As a general rule, avoid ads near the top of the page. You’ll likely see those flagged in the new GSC Page Experience report.

Preload fonts and critical resources.

A font loading late causes a full flash and re-write.

Preload tells the browser that you would like to fetch a resource sooner than the browser would otherwise discover it, because you are certain it is important for the current page.

    <link rel="preload" href="/assets/Pacifico-Bold.woff2" as="font" type="font/woff2" crossorigin>

Avoid chains for resources needed to create above-the-fold content.

Chains happen when you call a resource that calls another resource. If a critical asset is called by a script, it can’t be called until that script has executed.

Avoid document.write().

Modern browsers support speculative parsing off the main thread.

They work ahead while scripts are being downloaded and executed, like reading ahead of assignments in a class. Then document.write() comes in and changes the textbook. Now the work of reading ahead was wasted.

Chances are, this isn’t the work of your developers. Talk to the point of contact for that “magic” third-party tool.

CWV Metrics in the Future

Google plans to update the Page Experience components once a year. Future CWV metrics will be documented in the same way that the initial signal rollout was.

Imagine a world where SEO professionals knew about Panda’s impending arrival a year in advance!

Core Web Vitals already make up 55% of your Lighthouse v7 score.

Currently, Largest Contentful Paint (LCP) and First Input Delay (FID, measured in the lab as Total Blocking Time) are each weighted at 25%. Cumulative Layout Shift is a meager 5%, but we may see these weights equalize.

Smart money is on Q4 2021, once the Chromium team has honed the metric’s calculation.

As technical SEO experts, we can diagnose and recommend solutions for a better user experience. The thing is, those investments and improvements have an impact on all users.

You can find ROI in every medium. Every channel.

Organic performance reflects the overall health of the site. Use that position to your advantage as you continue to advocate and iterate. Share what you discover.

The most important point:

Don’t be afraid to learn in public.
